Let’s start this introduction with Internet web applications. Such web applications are normally deployed in so-called DMZ network zones because they can reside neither in the Intranet network zone nor in the plain Internet. Services placed in front of such web applications, such as reverse proxies and load balancers, but also web application firewalls (WAF) and web access management (WAM) infrastructures, are found here as well.

However, a DMZ is also the place to host other services and infrastructures, such as external-facing e-mail servers, domain name servers, or termination points for external VPNs. The following sections provide some background to promote understanding of the important role of a DMZ as part of a holistic Internet Security Architecture.

Military origins: “De-Militarized Zone”

DMZ is the abbreviation for “de-militarized zone”, a special area between the front lines in a war or conflict. The term “no-man’s land” is also often used, describing a deadly no-go area. However, that is only the worst case. In the best case, a DMZ is an area with defined rules and procedures for passing securely across the front lines, just like the NNSC (Neutral Nations Supervisory Commission) camp near Panmunjom on the heavily fortified border between North and South Korea.

The NNSC camp contains the famous blue UN barracks located in the middle of the zone. They are accessible to both sides, while each side manages its own border individually. The DMZ is also home to a number of permanent Swiss and Swedish residents, who have offered their neutral services to both belligerent camps for more than 50 years. These residents maintain strict processes and procedures that are respected by both sides, which probably makes this tiny zone the safest part of this highly dangerous border.

This hints that the character of a DMZ depends heavily not only on its setup but also on its purpose. Some DMZs resemble the area between front lines described above, where you would fear for your life; others are highly trusted security zones where you would not hesitate to deposit your valuables.

Purpose, configuration and usage define a DMZ

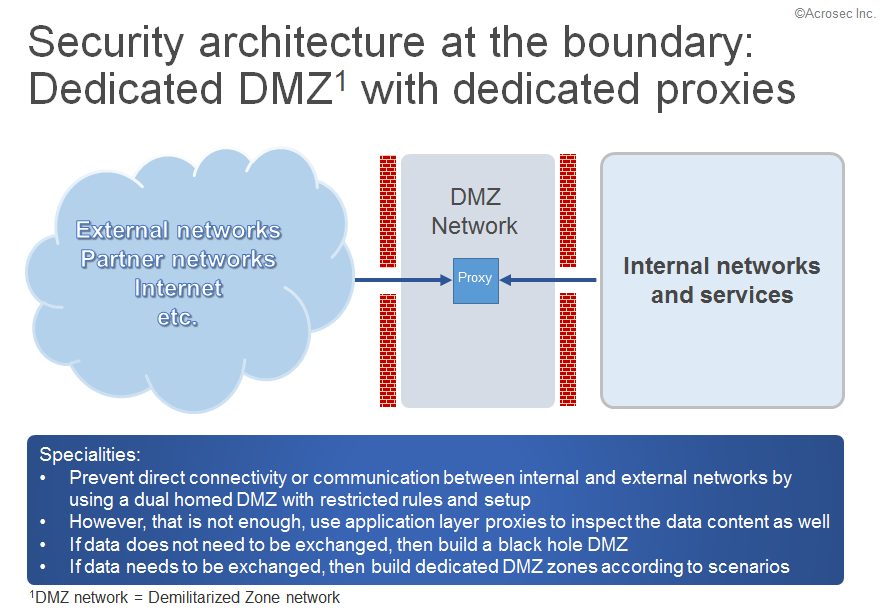

In IT terms, the analogue of the Panmunjom DMZ is the so-called “dual homed DMZ”: a dedicated network zone whose servers are sandwiched between two firewalls, one to the north and one to the south, usually placed between other network zones of different trust levels.

The usual and obvious way to look at such a DMZ is from the technical perspective. Naturally so, as it is a technical subject belonging to network administration and a few security specialists. It is also a subject full of pitfalls, as many technical details need to be considered from multiple angles, and tiny details can make the difference between security failure and success. However, there are many other ways to look at such DMZ network zones.

It is purpose, configuration and usage that define whether a DMZ is a dangerous no-go area or a trusted security zone.

What defines the character of a DMZ?

- Purpose

- Current configuration

- Actual use in practice (i.e. reality, regardless of purpose and configuration)

- Degree to which stakeholder expectations are met (or, put differently, the level of illusion)

These characteristics translate directly into the failure or success of such an infrastructure, which makes the subject too important to leave to network administration alone. The business perspective, or better said the enterprise architecture perspective, needs to be considered in order to satisfy the expectations of all stakeholders.

Basic concept of a DMZ

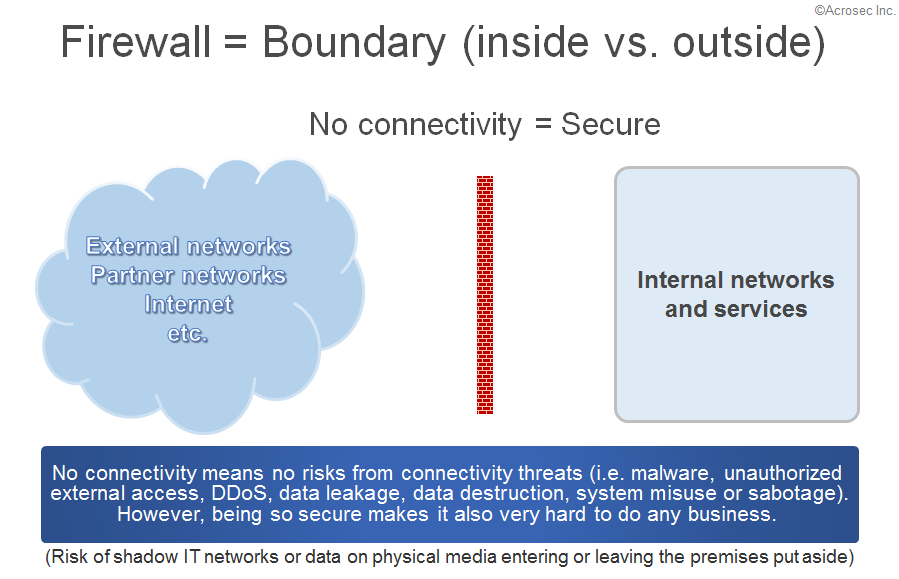

DMZ network zones exist because networks of different trust levels need to communicate. Without such communication needs, there is no need for a DMZ.

No connectivity means no risks from connectivity threats (e.g. malware, unauthorized external access, DDoS, data leakage, data destruction, system misuse or sabotage). Other risks still exist, of course, such as a shadow IT network put in place unofficially, or data on physical media entering or leaving the premises. Putting such risks aside, you have a very secure environment if there is no network connectivity at all.

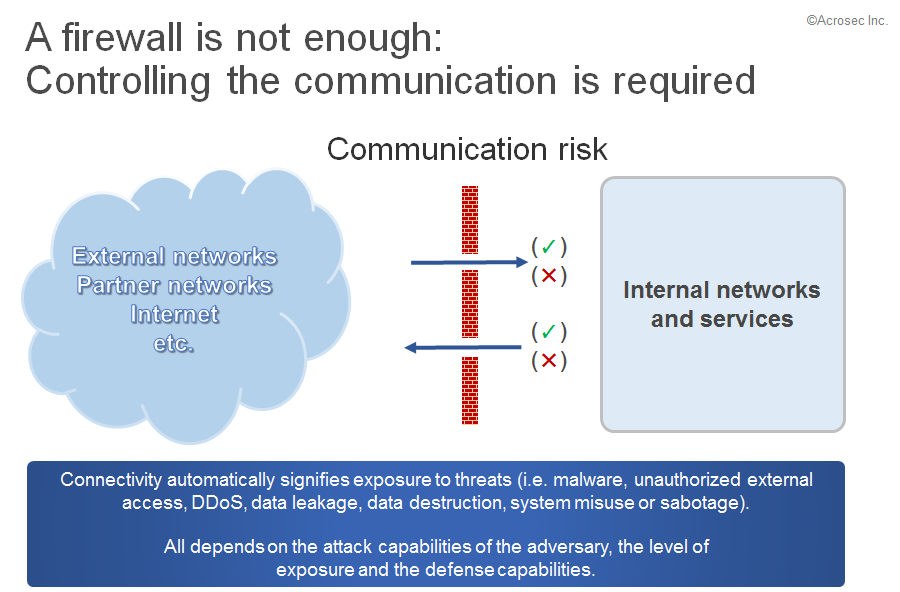

Nevertheless, being this secure also makes it very hard to do any business. Opening up the firewall is inevitable, and connectivity inevitably means exposure to such threats. The risk level depends on the level of exposure, the attack capabilities of the adversary, the defense capabilities, and last but not least on the cost-benefit calculation of both sides (yes, attackers think in cost-benefit terms too).

Security depends on the implementation scenario, and you might even get burned by a seemingly correct setup with seemingly correct firewall rules. Which side establishes a connection and in which direction data flows are, in some cases, independent factors: once a connection is established, data can typically flow both ways, regardless of who opened it. You can only judge this if you know the protocol's capabilities and how the server behavior is actually implemented.
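To make the point about connection initiation versus data flow concrete, here is a minimal Python sketch using only the standard `socket` and `threading` modules on the loopback interface. The function names `recv_all` and `demo_bidirectional_flow` and the messages are illustrative assumptions: a client opens a TCP connection, and the server, which never initiated anything, still sends data back over that same connection.

```python
import socket
import threading


def recv_all(sock: socket.socket) -> bytes:
    """Read from the socket until the peer signals end-of-stream."""
    chunks = []
    while True:
        data = sock.recv(1024)
        if not data:
            break
        chunks.append(data)
    return b"".join(chunks)


def demo_bidirectional_flow() -> tuple[bytes, bytes]:
    """The client initiates the connection, yet data flows in both directions."""
    with socket.create_server(("127.0.0.1", 0)) as server:  # port 0: the OS picks a free port
        port = server.getsockname()[1]
        received_by_server: list[bytes] = []

        def serve() -> None:
            conn, _ = server.accept()
            with conn:
                received_by_server.append(recv_all(conn))
                # The server never initiated anything, but it can still push data back.
                conn.sendall(b"data flowing back to the initiator")

        worker = threading.Thread(target=serve)
        worker.start()
        with socket.create_connection(("127.0.0.1", port)) as client:
            client.sendall(b"request from the initiator")
            client.shutdown(socket.SHUT_WR)  # done sending; the connection stays open for reading
            reply = recv_all(client)
        worker.join()
    return received_by_server[0], reply
```

In firewall terms, the client sits on the side that is allowed to initiate, but the reply travels the other way over the same connection; a rule about who may connect to whom therefore says little about which way data can move.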

Table: Questions for inbound | Policies to consider

These may sound like innocent considerations; however, inbound connectivity is the starting point for a plethora of additional questions. Can such servers be placed together if they are supposed to be accessed externally? What about accessing them internally as well? How should service invocation calls coming from such servers be treated, e.g. from the web application to the service or DB layer? Where should these secondary servers (e.g. DB) be placed, anyway? What is the difference between an authenticated user of a customer application and an unauthenticated user of a public web application? What is the correct authentication level? How should machines be authenticated, and how would that compare to users? How can secure systems administration be implemented?

Many additional policy considerations are needed to decide on further access to the internal network. Furthermore, additional watchdog services (e.g. authorization, monitoring) are required at the boundary and, where it matters, within the internal network. And so on.

Similar considerations apply to outbound connectivity, although the concern here is far more about data security, especially data leakage.
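As a rough illustration of outbound controls against data leakage, the following Python sketch checks an outbound request against a destination allowlist, a size limit and a simple content pattern. Everything here is a hypothetical assumption for illustration: the hostnames, the threshold, the card-number-like regular expression, and the names `OutboundRequest` and `check_outbound`. Real data leakage prevention is considerably more involved.

```python
import re
from dataclasses import dataclass

# Hypothetical egress policy values, for illustration only.
ALLOWED_DESTINATIONS = {"updates.example.com", "partner-api.example.net"}
MAX_UPLOAD_BYTES = 1_000_000
SENSITIVE_PATTERNS = [
    # Card-number-like strings such as 1234-5678-9012-3456 (deliberately naive).
    re.compile(rb"\b\d{4}-\d{4}-\d{4}-\d{4}\b"),
]


@dataclass
class OutboundRequest:
    destination: str
    payload: bytes


def check_outbound(req: OutboundRequest) -> tuple[bool, str]:
    """Return (allowed, reason) for an outbound request."""
    if req.destination not in ALLOWED_DESTINATIONS:
        return False, "destination not allowlisted"
    if len(req.payload) > MAX_UPLOAD_BYTES:
        return False, "upload exceeds size limit"
    for pattern in SENSITIVE_PATTERNS:
        if pattern.search(req.payload):
            return False, "payload matches a sensitive-data pattern"
    return True, "allowed"
```

The order of the checks is a design choice: cheap, unambiguous rules (destination, size) run before content inspection, which is the part most prone to false positives and false negatives.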

Table: Questions for outbound | Policies to consider

Accepting connectivity is like opening Pandora’s box. However, if there ever was a moment for that decision, it has most probably long passed in most firms and organizations. What matters now is finding an acceptable balance between risk exposure and security investment in order to sleep well at night.

DMZ Security Architecture as security vehicle

Obviously, servers that enable such communication belong in a dedicated network zone: a DMZ. Beyond that, however, a comprehensive IT Security Architecture is recommended to deal with the communication risks.

Everything starts with the premise of preventing direct connectivity or communication between internal and external networks by using a DMZ architecture with restrictive rules and setup. Ideally, two firewalls form the internal and the external boundary. The DMZ harbors the servers that need to be accessible from both sides.
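The premise above can be expressed as a default-deny rule set between zones. The following Python sketch is a simplified model, not a firewall configuration: the zone names, ports and the names `ALLOW_RULES`, `is_allowed` and `premise_violations` are hypothetical. It also includes a small check that flags any rule connecting the external and internal zones directly.

```python
from enum import Enum


class Zone(Enum):
    EXTERNAL = "external"
    DMZ = "dmz"
    INTERNAL = "internal"


# Hypothetical rule set: (source zone, destination zone, destination port).
ALLOW_RULES = {
    (Zone.EXTERNAL, Zone.DMZ, 443),  # public HTTPS to a reverse proxy / WAF in the DMZ
    (Zone.INTERNAL, Zone.DMZ, 443),  # internal users reach the same DMZ services
    (Zone.INTERNAL, Zone.DMZ, 22),   # administration, initiated from the inside only
}


def is_allowed(src: Zone, dst: Zone, port: int) -> bool:
    """Default deny: only explicitly listed flows may open a connection."""
    return (src, dst, port) in ALLOW_RULES


def premise_violations(rules: set[tuple[Zone, Zone, int]]) -> list[tuple[Zone, Zone, int]]:
    """List rules that connect the external and internal zones directly."""
    return [
        (src, dst, port)
        for src, dst, port in rules
        if {src, dst} == {Zone.EXTERNAL, Zone.INTERNAL}
    ]
```

Running such a check on every proposed rule change is one way to keep the premise from eroding over time, since a single convenient exception is enough to bypass the DMZ.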

Ideally, no connection is ever initiated from the DMZ: it can be reached from either side, but connections only ever point into it. Just think of it as a black hole. However, just as Hawking postulated that a black hole is not strictly one-way and that something can get out of it after all, the same holds for a DMZ: connections opened from outside the DMZ can be kept open in order to retrieve the desired data, all while respecting the initial policy that services in the DMZ never start connections on their own.
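The “black hole” behavior corresponds to stateful filtering: new connections may only point into the DMZ, while replies travel back over connections that were opened from outside it. Below is a toy Python model of such connection tracking; the class `StatefulBoundary`, its methods and the zone names are hypothetical. Real firewalls track state per connection (addresses, ports, protocol), which this sketch deliberately reduces to a connection id.

```python
class StatefulBoundary:
    """Toy connection-tracking firewall between zones (illustration only)."""

    def __init__(self, may_initiate: set[tuple[str, str]]) -> None:
        # Pairs (source zone, destination zone) allowed to open NEW connections.
        self.may_initiate = may_initiate
        # Established connections: id -> (initiator zone, responder zone).
        self.established: dict[str, tuple[str, str]] = {}

    def open_connection(self, conn_id: str, src: str, dst: str) -> bool:
        if (src, dst) not in self.may_initiate:
            return False  # e.g. a DMZ service trying to connect on its own
        self.established[conn_id] = (src, dst)
        return True

    def forward(self, conn_id: str, src: str, dst: str) -> bool:
        """Forward traffic only on an established connection, in either direction."""
        endpoints = self.established.get(conn_id)
        return endpoints is not None and {src, dst} == set(endpoints)

    def close_connection(self, conn_id: str) -> None:
        self.established.pop(conn_id, None)
```

With `may_initiate` containing only pairs that end in the DMZ, an internal system can open a connection and pull data back from the DMZ, but the DMZ itself can never start a new connection.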

Furthermore, these DMZ servers need to be protected. Leaving them alone is not an option, as anything connected directly to the Internet is highly exposed and at risk. Constant monitoring and a secure way of maintaining the servers are required.

The proxy service in the DMZ makes the difference

Depending on the scenario, some data still needs to pass through the firewall, so more control is needed. But which data packet is okay and which is not? Application-layer knowledge is required in order to inspect the content payload of a data packet.
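To illustrate what application-layer knowledge means, here is a minimal Python sketch of a check a proxy could apply to the first bytes of a connection before forwarding them. A purely port-based rule would let any bytes through on port 80; this check only passes something that looks like an HTTP/1.x request with an allowed method. The function name `inspect_http_request` and the allowed-method set are assumptions for illustration, and this is not a complete HTTP parser.

```python
ALLOWED_METHODS = {"GET", "POST"}  # hypothetical policy for a simple web application
ALLOWED_VERSIONS = {"HTTP/1.0", "HTTP/1.1"}


def inspect_http_request(raw: bytes) -> tuple[bool, str]:
    """Inspect the request line of a raw HTTP/1.x request (not a full parser)."""
    request_line = raw.split(b"\r\n", 1)[0]
    try:
        text = request_line.decode("ascii")
    except UnicodeDecodeError:
        return False, "request line is not ASCII"
    parts = text.split(" ")
    if len(parts) != 3:
        return False, "malformed request line"
    method, target, version = parts
    if version not in ALLOWED_VERSIONS:
        return False, "unsupported protocol version"
    if method not in ALLOWED_METHODS:
        return False, f"method {method} not allowed"
    if not target.startswith("/"):
        return False, "unexpected request target"
    return True, "ok"
```

For example, an SSH client banner sent to port 80 would pass a port-based rule but is rejected here as a malformed request line.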

That is where the purpose of the DMZ becomes important. There is no such thing as an all-purpose data inspection service that is good enough for every conceivable scenario. Next-generation firewalls are often sold with the argument that they provide deep packet inspection. A good thing, in theory. However, it is a feature that is easy to turn on but hard to maintain over time.

The behavior of such a firewall is guided by myriad background rules, possibly updated automatically by the vendor. This makes it error-prone and difficult to maintain in a complex enterprise IT. A serious business whose services must remain available cannot leave them to chance. Separation of concerns is a good and manageable alternative for preventing chaos.

That is why deep packet inspection is rarely used intensively on next-generation firewalls. Deep packet inspection matters more in dedicated scenarios, where it is often implemented with specialized proxy solutions close to the application, both in terms of IT layer and in terms of the IT personnel who run it.

A Web Application Firewall (WAF) is such a dedicated proxy solution for web applications. All incoming web traffic for the application is routed through and filtered by this security device. In the best case, the application owner actively uses the WAF features to set up rock-solid web application security.
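A WAF typically combines a positive model (what the application legitimately accepts) with negative signatures (known attack patterns). The following Python sketch shows both ideas on a URL using the standard `urllib.parse` module. The allowed paths, the two signatures and the function `waf_decision` are hypothetical and deliberately simplistic; signatures this simple are easy to evade and only illustrate the mechanism.

```python
import re
from urllib.parse import parse_qs, urlsplit

# Hypothetical positive model: the paths the application actually exposes.
ALLOWED_PATHS = {"/", "/login", "/search"}

# Hypothetical negative signatures (illustrative, easy to evade).
SIGNATURES = [
    ("sql-injection", re.compile(r"['\"]\s*(?:or|and)\s+\d+\s*=\s*\d+", re.IGNORECASE)),
    ("script-tag", re.compile(r"<\s*script", re.IGNORECASE)),
]


def waf_decision(url: str) -> str:
    """Return 'allow' or 'block: <reason>' for a requested URL."""
    parts = urlsplit(url)
    if parts.path not in ALLOWED_PATHS:
        return "block: unknown path"
    # parse_qs percent-decodes the values, so signatures see the decoded input.
    for values in parse_qs(parts.query).values():
        for value in values:
            for name, pattern in SIGNATURES:
                if pattern.search(value):
                    return f"block: {name}"
    return "allow"
```

The positive model (`ALLOWED_PATHS` here) is the part the application owner is best placed to maintain, which is one reason a WAF works best when the owner actively uses it.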

A DMZ Security Architecture needs to be designed according to the individual needs of a firm or organization. However, there are typical scenarios that can serve as templates to help implement such a Security Architecture at the boundary. Read more: Dedicated DMZs – Security Architecture blueprints.

Technical implementation details

There are many ways to implement a DMZ. The following details should be considered when building one:

- Choose between a dual homed or single homed DMZ design

- Design the number of network segments in a DMZ, e.g. external facing network, internal facing network, additional networks for dedicated purpose

- Design how to do systems management of DMZ infrastructure and application servers, e.g. via dedicated management interfaces

- Decide how many switches to use (sharing hardware between external and internal segments is a security risk)

- Decide which DMZ elements to virtualize and which to keep physical

- Decide which network or infrastructure services to implement separately (reusing internal DNS, AD etc. is a security risk)

- Design policies of how to use the DMZ and clarify ownership responsibilities

- Design change management procedures and oversight responsibilities for the DMZ

- etc.

Note: This list is not exhaustive.

Note: A dual homed DMZ design has two firewalls, one external and one internal; all DMZ pictures here were created with this scenario in mind. Single homed DMZ designs have only one firewall (a quick and cheap approach with a higher risk of exposure).
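The difference between the two designs can be expressed as a property of the topology: the minimum number of firewalls that any path from the Internet to the internal network must cross. The following Python sketch computes this with Dijkstra's algorithm over a hypothetical node and link model; the node names such as `fw_ext` and the function `min_firewalls_on_path` are illustrative assumptions. It also shows how a stray link, for example through shared switch hardware, can silently turn a dual homed design into an effectively single homed one.

```python
import heapq


def min_firewalls_on_path(links, firewalls, src, dst):
    """Minimum number of firewall nodes on any path from src to dst.

    links: iterable of (node_a, node_b) undirected links.
    firewalls: set of node names that are firewalls.
    Returns None if dst is unreachable. src itself is not counted.
    """
    graph = {}
    for a, b in links:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    dist = {src: 0}
    heap = [(0, src)]
    while heap:
        cost, node = heapq.heappop(heap)
        if node == dst:
            return cost
        if cost > dist.get(node, float("inf")):
            continue  # stale heap entry
        for nxt in graph.get(node, ()):
            # Entering a firewall node costs 1, any other node costs 0.
            new_cost = cost + (1 if nxt in firewalls else 0)
            if new_cost < dist.get(nxt, float("inf")):
                dist[nxt] = new_cost
                heapq.heappush(heap, (new_cost, nxt))
    return None
```

For a dual homed layout (`internet - fw_ext - dmz - fw_int - internal`) the result is 2; for a single homed layout where one firewall connects all three zones it is 1; and adding a direct `dmz - internal` link to the dual homed layout drops it back to 1.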

Author: Roberto Di Paolo

2016/02/05 © ACROSEC Inc./last update 2018/07/05